18th March 2024

The era of generative AI has very much arrived, and with it have come a number of challenges for businesses and society as a whole. Of all its machine learning applications, the one with potentially the most serious implications is ‘deepfakes’.

Regardless of the size of your business or the sector it operates in, this latest digital threat needs to be understood and taken seriously. But what are deepfakes, and how can a Digital Asset Management system help your organisation protect itself from the potential risks?

A ‘deepfake’ is an image or video that’s been digitally manipulated using artificial intelligence and machine learning, typically to make someone’s likeness or voice appear to be that of someone else.

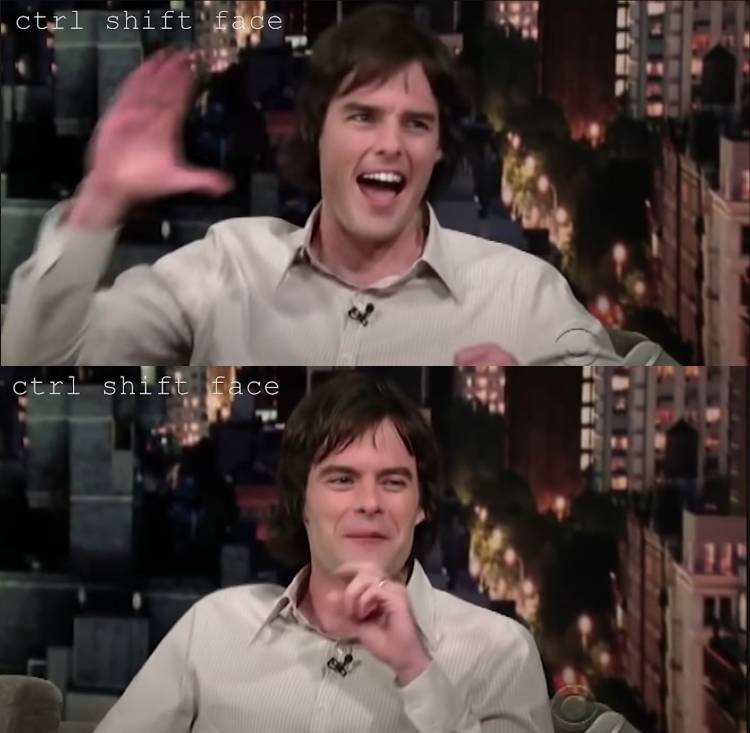

Some high-profile examples include a fake video of Mark Zuckerberg bragging about having “total control of billions of people’s stolen data”, Jon Snow apologising for Season 8 of Game of Thrones, and actor and comedian Bill Hader’s Tom Cruise impression, enhanced with deepfake technology.

Deepfake technology was used to change actor Bill Hader into Tom Cruise

Deepfake technology doesn’t work like photo editing software. Deepfakes are often created using two competing neural networks, a ‘generator’ and a ‘discriminator’, which together form a generative adversarial network (GAN). The generator creates fake digital media, while the discriminator tries to tell whether the content it is shown is real or artificial. The discriminator’s verdicts are passed back to the generator so it can improve, and over many rounds the fakes become harder to tell apart from real content.
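The generator/discriminator feedback loop can be sketched as a toy, one-dimensional GAN in pure Python. This is a hypothetical illustration, not how any particular deepfake tool is built: each ‘media sample’ is just a number, the real data are assumed to be drawn from a normal distribution with mean 4.0, the generator is a linear function of random noise (`a * z + b`) and the discriminator is a logistic-regression classifier (`sigmoid(w * x + c)`). The `ToyGAN` class and its parameters are invented for this sketch. Real deepfake systems use deep neural networks on images, video or audio, but the alternating updates follow the same adversarial pattern.

```python
import math
import random


def sigmoid(s: float) -> float:
    """Numerically stable logistic function, mapping any score to (0, 1)."""
    if s >= 0:
        return 1.0 / (1.0 + math.exp(-s))
    e = math.exp(s)
    return e / (1.0 + e)


class ToyGAN:
    """Hypothetical toy 1-D GAN: each 'media sample' is a single number."""

    def __init__(self, real_mean=4.0, real_std=1.0, lr=0.05, batch=64, seed=0):
        self.rng = random.Random(seed)
        self.real_mean, self.real_std = real_mean, real_std
        self.lr, self.batch = lr, batch
        self.a, self.b = 1.0, 0.0  # generator: fake = a * z + b, noise z ~ N(0, 1)
        self.w, self.c = 0.0, 0.0  # discriminator: D(x) = sigmoid(w * x + c)

    def generate(self, z):
        return self.a * z + self.b

    def discriminate(self, x):
        """Discriminator's estimate of the probability that x is real."""
        return sigmoid(self.w * x + self.c)

    def _noise(self):
        return [self.rng.gauss(0.0, 1.0) for _ in range(self.batch)]

    def discriminator_step(self):
        """Gradient step on -[log D(real) + log(1 - D(fake))]: learn to spot fakes."""
        real = [self.rng.gauss(self.real_mean, self.real_std) for _ in range(self.batch)]
        fake = [self.generate(z) for z in self._noise()]
        gw = gc = 0.0
        for x in real:
            g = self.discriminate(x) - 1.0  # d(-log D)/d(score)
            gw, gc = gw + g * x, gc + g
        for x in fake:
            g = self.discriminate(x)  # d(-log(1 - D))/d(score)
            gw, gc = gw + g * x, gc + g
        self.w -= self.lr * gw / self.batch
        self.c -= self.lr * gc / self.batch

    def generator_step(self):
        """Gradient step on -log D(fake): the discriminator's verdict is the feedback."""
        ga = gb = 0.0
        for z in self._noise():
            # Chain rule: d(-log D)/d(fake) = (D - 1) * w; d(fake)/da = z, d(fake)/db = 1
            g = (self.discriminate(self.generate(z)) - 1.0) * self.w
            ga, gb = ga + g * z, gb + g
        self.a -= self.lr * ga / self.batch
        self.b -= self.lr * gb / self.batch

    def train(self, rounds):
        """Alternate the two players: one discriminator step, then one generator step."""
        for _ in range(rounds):
            self.discriminator_step()
            self.generator_step()
        return self


gan = ToyGAN().train(rounds=200)
print(f"generator offset b = {gan.b:.2f} (real mean is {gan.real_mean})")
```

In the first rounds the discriminator learns that larger numbers look ‘real’ (its weight `w` becomes positive), and that verdict pushes the generator’s offset `b` upwards towards the real data. Over longer runs the two players can oscillate around each other rather than settle, which is a well-known difficulty of GAN training.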

Although deepfakes can be used for entertainment, as in the examples above, they can also be used maliciously, and that is a risk your organisation should be aware of.

It’s still early days for the development of deepfake technology, and many examples of manipulated media circulating on social media are crudely executed and unconvincing. However, a number of deepfakes released over the last couple of years have been convincing and difficult to identify, and the technology is only going to get more sophisticated.

Public concern about deepfakes has centred on their potential for spreading disinformation in an attempt to influence the public, whether the aim is to cause confusion and uncertainty or civil unrest. However, this synthetic media poses a number of threats to organisations too.

From damage to brand reputation and customer trust, through to financial consequences, the potential implications of AI deepfakes for organisations and institutions are massive and wide-ranging.

For example, companies could become the victims of fraud as a result of deepfakes, as happened to the CEO of an unnamed UK-based energy firm in 2019. The executive thought he was speaking on the phone to his boss, whose voice had in fact been imitated using AI, when he was asked to immediately transfer €220,000 to a specified bank account.

Companies could also face regulatory compliance issues if employees unknowingly share manipulated images or videos, leading to defamation or privacy violations.

Fortunately, there are some tell-tale signs that digital media is a deepfake, so it’s worth knowing what to look out for when you see something that doesn’t look right or is potentially damaging:

To guard against these risks, businesses should implement training and processes that make deepfake-based fraud and hacking attempts less likely to succeed, and invest in systems that can act as a single source of truth for the organisation’s digital media.

Although there are a number of visual clues that an image or video has been manipulated, deepfakes can still be incredibly hard to spot. With that in mind, the secret to identifying a deepfake isn’t in what you see, but in where you find it.

This is where a secure Digital Asset Management system comes in. A DAM acts as a single source of truth for all of your digital media, with a DAM Manager closely guarding what is uploaded and accessible to an organisation’s employees. This can include all brand assets, sales and marketing collateral, as well as promotional video content.

A DAM helps to ensure the integrity of every digital asset, verifying each one and making sure it can be traced back to its original source. In the future, combining DAM with AI technology could even help to mitigate the risks posed by deepfakes, with AI-based content authentication making it much less likely that synthetic media will be uploaded to the system.
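The idea of trusting where an asset comes from, rather than how it looks, can be sketched with checksums. The minimal Python example below is a hypothetical illustration, not ResourceSpace’s actual implementation or API: the `AssetLibrary` and `Asset` classes and their methods are invented for this sketch. Uploads start out pending until a DAM Manager approves them, and each asset is fingerprinted with SHA-256 (via Python’s standard `hashlib`), so a copy circulating elsewhere can be checked byte for byte against the approved master and traced back to its recorded source.

```python
import hashlib
from dataclasses import dataclass, field
from typing import Dict, Optional


@dataclass
class Asset:
    sha256: str             # fingerprint of the exact bytes that were submitted
    source: str             # where the file came from (e.g. the uploading team)
    approved: bool = False  # only a DAM Manager can make an asset 'trusted'


@dataclass
class AssetLibrary:
    """Hypothetical 'single source of truth' (not ResourceSpace's API)."""
    assets: Dict[str, Asset] = field(default_factory=dict)

    def submit(self, asset_id: str, data: bytes, source: str) -> str:
        """Record an upload as pending review; (re)submitting resets approval."""
        digest = hashlib.sha256(data).hexdigest()
        self.assets[asset_id] = Asset(sha256=digest, source=source)
        return digest

    def approve(self, asset_id: str) -> None:
        """A DAM Manager signs off the asset, making it available to staff."""
        self.assets[asset_id].approved = True

    def verify(self, asset_id: str, data: bytes) -> bool:
        """True only if `data` is byte-for-byte the approved master copy."""
        asset = self.assets.get(asset_id)
        if asset is None or not asset.approved:
            return False  # unknown or unreviewed: not part of the trusted library
        return hashlib.sha256(data).hexdigest() == asset.sha256

    def trace(self, asset_id: str) -> Optional[str]:
        """Return the recorded original source, if the asset is known."""
        asset = self.assets.get(asset_id)
        return None if asset is None else asset.source


library = AssetLibrary()
master = b"<bytes of the approved CEO statement video>"
library.submit("ceo-statement", master, source="Communications team")
print(library.verify("ceo-statement", master))  # False: still pending review
library.approve("ceo-statement")
print(library.verify("ceo-statement", master))  # True
print(library.verify("ceo-statement", master + b"tampered"))  # False
print(library.trace("ceo-statement"))  # Communications team
```

A checksum only proves that a file is identical to the copy that was approved; it cannot tell whether that master was genuine in the first place, which is why the human review step still matters in this sketch.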

Do you want to find out more about how ResourceSpace can act as the single source of truth for your digital assets? Request your free 30-minute demo, or click here to launch your free DAM portal within minutes.
