CLIP AI Smart Search

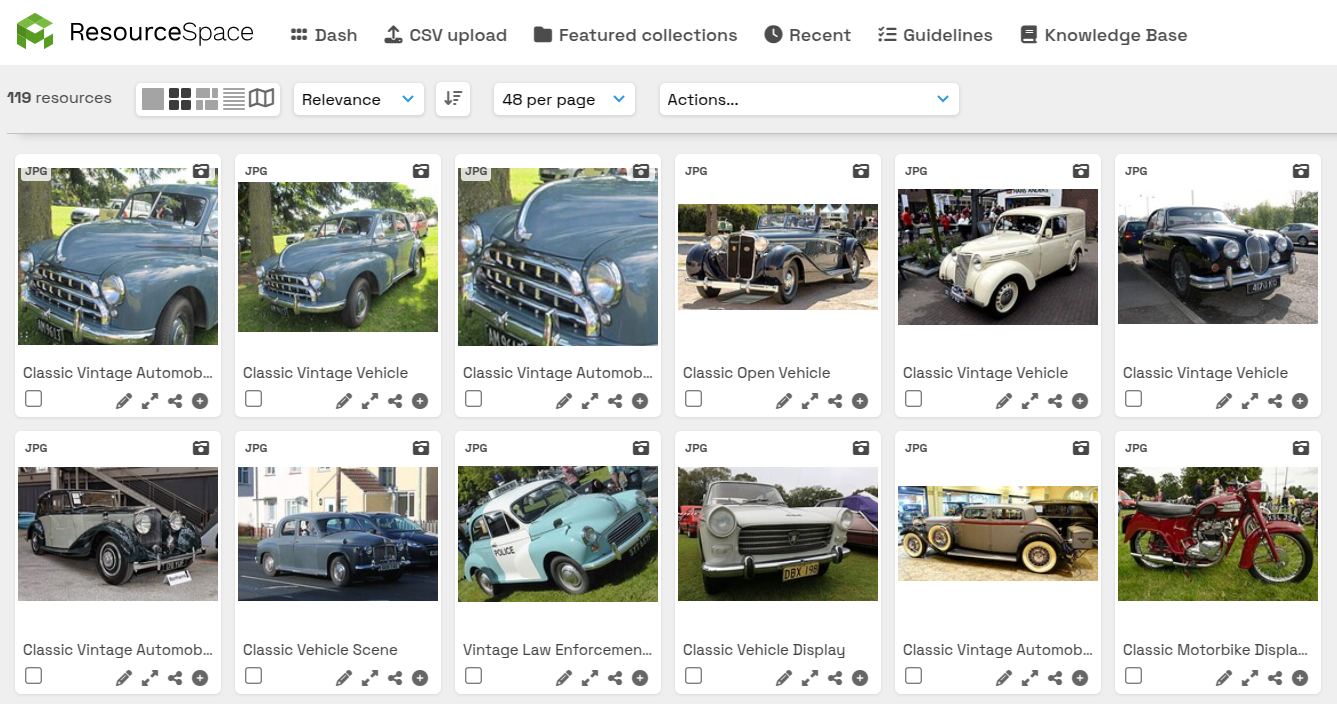

The CLIP Visual Search plugin integrates OpenAI's CLIP (Contrastive Language-Image Pretraining) model into ResourceSpace, allowing powerful visual similarity and natural language image searches based on actual image content rather than metadata.

Features

- Search ResourceSpace images using a natural language description (e.g., "red double-decker bus").

- Find visually similar images by uploading an example image.

- Find duplicates or near-duplicates based on visual similarity scores.

- Efficient searching powered by FAISS (Facebook AI Similarity Search) for fast nearest-neighbour lookups.

How it Works

The plugin generates a vector representation (512-dimensional) of each image when indexed. These vectors represent the visual and semantic features of the images. Searches work by comparing these vectors rather than relying on traditional metadata.
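To make the comparison concrete, here is a minimal sketch in plain Python of how two vectors can be scored against each other and how a brute-force nearest-neighbour search ranks results. It is illustrative only: the toy 3-dimensional vectors stand in for the 512-dimensional ones, the resource IDs and function names are made up, and the plugin uses FAISS rather than a loop like this. Cosine similarity is assumed as the metric, which is a common choice for CLIP embeddings but is not confirmed by this page.

```python
import math

def cosine_similarity(a, b):
    """Return the cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # a zero vector has no direction, so treat it as no match
    return dot / (norm_a * norm_b)

def nearest(query, vectors, k=2):
    """Brute-force top-k search: score every stored vector, best first."""
    scored = [(cosine_similarity(query, vec), ref) for ref, vec in vectors.items()]
    scored.sort(reverse=True)
    return [(ref, score) for score, ref in scored[:k]]

# Hypothetical resource IDs mapped to toy 3-dimensional vectors.
vectors = {
    101: [1.0, 0.0, 0.0],
    102: [0.9, 0.1, 0.0],
    103: [0.0, 1.0, 0.0],
}

# The query vector would come from an image or a text description.
results = nearest([1.0, 0.0, 0.0], vectors, k=2)
```

A library such as FAISS performs the same kind of ranking, but with index structures that avoid scoring every stored vector one at a time in interpreted code.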

Usage

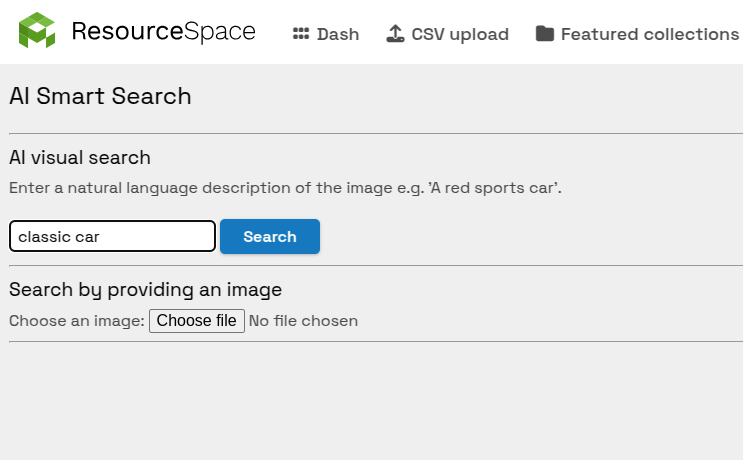

Natural Language Search

From "AI Smart Search" at the bottom of the right-hand search bar, enter a description of the image you're looking for (e.g., "beach sunset with palm trees") and the plugin will return the closest matching results from the archive. The option to perform a natural language search also appears as a button in the search bar for regular searches.

Image Upload Search

Again from "AI Smart Search" in the search bar, select an image from your computer. The plugin will automatically resize the image to 256x256 pixels on the client side, then submit the processed image for a visual similarity search. The original full-size file is not uploaded. This means you can search very rapidly for a matching image without having to wait for a large upload.

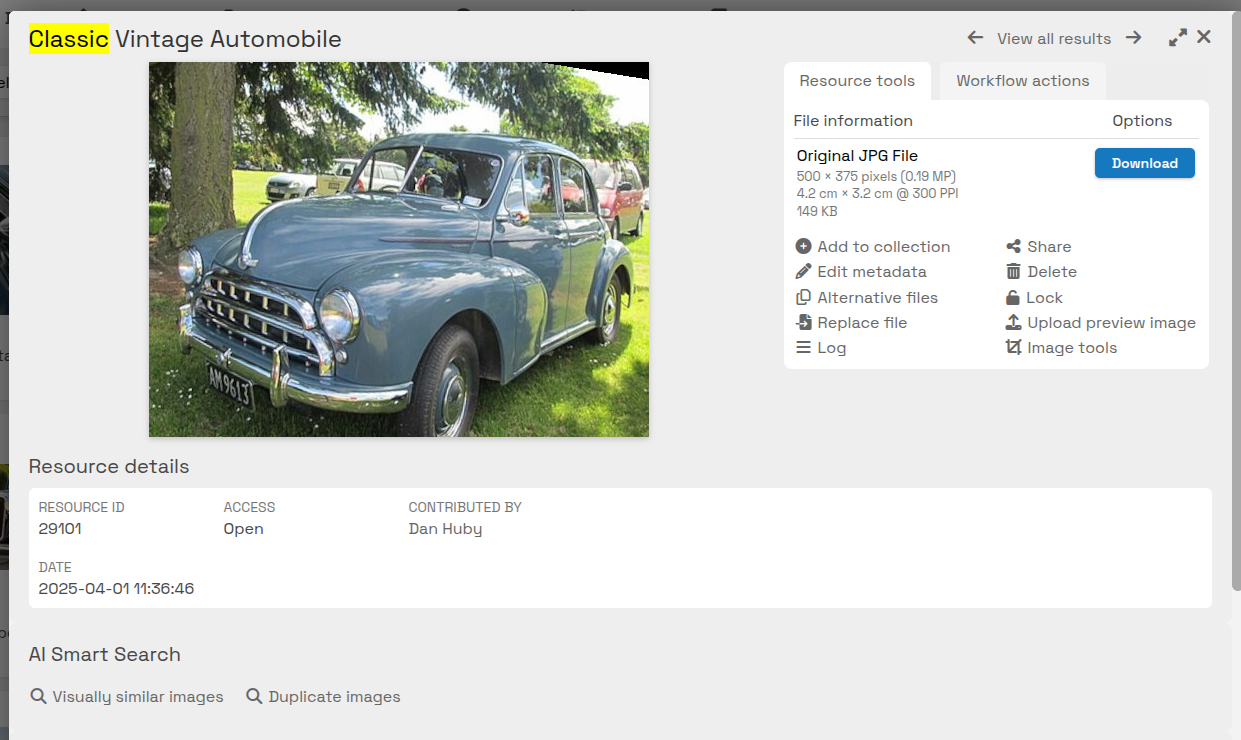

Find Similar Images

From a resource view page, use the "Visually Similar Images" option under the AI Smart Search heading to locate other resources in the system with a high visual similarity.

Setup

- Enable the CLIP plugin from Manage Plugins in ResourceSpace.

- Ensure the separate Python-based CLIP service is running. This service communicates with ResourceSpace over HTTP and handles vector generation and searching.

System Requirements

- Python 3.8+ installed on the server.

- FAISS library installed in the Python environment.

- Python virtual environment (recommended) to isolate dependencies.

Installation

Create a Python virtual environment:

python3 -m venv clip-env

source clip-env/bin/activate

Install required Python packages:

pip install fastapi uvicorn torch torchvision ftfy regex tqdm

pip install git+https://github.com/openai/CLIP.git

pip install mysql-connector-python

pip install python-multipart

pip install faiss-cpu

pip install requests

Start the CLIP service:

cd /path/to/resourcespace/plugins/clip/scripts

python clip_service.py --dbuser [mysql_user] --dbpass [mysql_password]

You should see:

Loading CLIP model...

Model loaded.

The service will now be running on http://localhost:8000
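If you want to confirm that something is listening on that address before moving on, a small check like the one below can help. This is a sketch using only the Python standard library. It tests that a TCP connection can be opened on port 8000 and assumes nothing about the endpoints the service exposes. The function name is hypothetical.

```python
import socket

def is_listening(host="localhost", port=8000, timeout=2.0):
    """Return True if a TCP connection to host:port can be opened."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers connection refused, timeouts and name resolution failures.
        return False

if __name__ == "__main__":
    print("CLIP service reachable:", is_listening())
```

A result of True only shows that the port is open. It does not prove that the process behind it is the CLIP service or that the model has finished loading.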

Next, generate the vectors by running:

php /plugins/clip/scripts/generate_vectors.php

This will:

- Detect resources that need new vectors (based on file_checksum)

- Send the image to the Python service

- Store the 512-dim vector in the resource_clip_vector table
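The first step above, detecting which resources need a new vector, can be pictured as a checksum comparison. The sketch below is a hypothetical Python illustration of that idea and not the logic of generate_vectors.php itself. The dictionaries stand in for database rows, and the function and variable names are invented for the example.

```python
def resources_needing_vectors(resources, stored_vectors):
    """Return IDs of resources whose vector is missing or out of date.

    resources:      {resource_id: current file_checksum}
    stored_vectors: {resource_id: checksum recorded when the vector was made}
    """
    stale = []
    for ref, checksum in resources.items():
        # Index if no vector exists yet or the file changed since indexing.
        if ref not in stored_vectors or stored_vectors[ref] != checksum:
            stale.append(ref)
    return stale

# Hypothetical data: resource 2 has changed, resource 3 was never indexed.
resources = {1: "aaa111", 2: "bbb222", 3: "ccc333"}
stored_vectors = {1: "aaa111", 2: "old000"}

to_index = resources_needing_vectors(resources, stored_vectors)
```

Comparing checksums means unchanged files are skipped on later runs, so only new or modified images are sent to the Python service.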

If the Python script says ModuleNotFoundError: No module named 'fastapi', make sure you activated your virtual environment.

Note: after running generate_vectors.php, the clip_service.py service must be restarted before it will start using the new vectors.

If the Python service is shared, its MySQL credentials must have access to all ResourceSpace databases on the server. The calling plugin passes the database to use, which provides multi-tenant support.

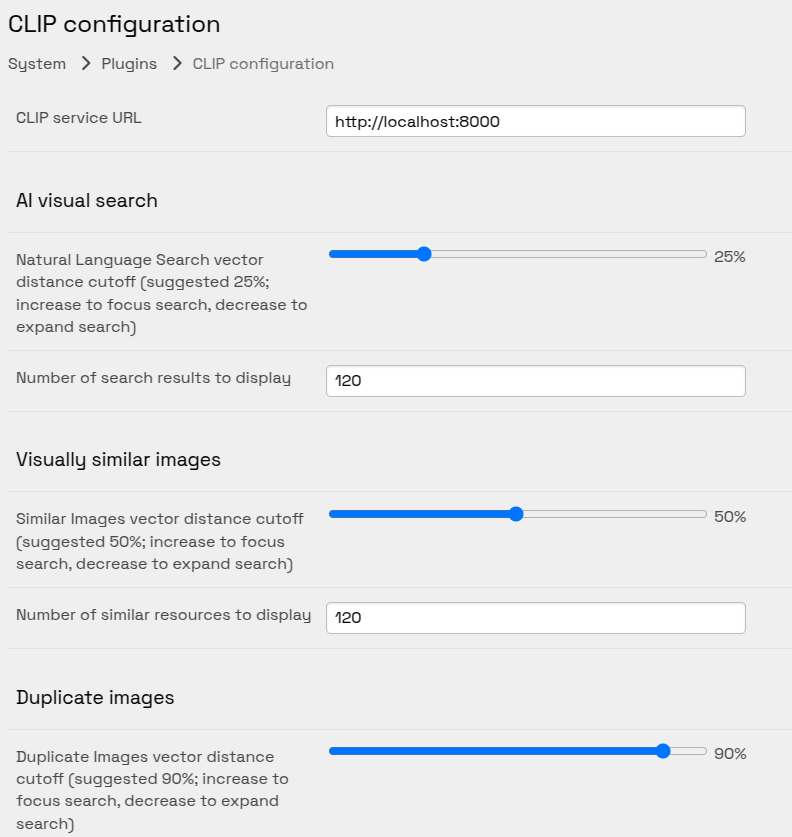

Plugin options

The plugin options allow you to select the cutoff for images deemed "similar" by the similarity search. You can also configure the number of similar resources to fetch. Similar options exist for the natural language search.

For duplicate matching, set a high threshold to ensure that results are genuine duplicates. A match of 100% means the same image, but crops and minor edits can reduce the match even though the image is essentially still the same. Some adjustment of the setting may be needed, for example if images that are similar but not actually duplicates are being returned.
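The effect of the threshold can be sketched as a simple filter over scored matches. The Python below is a hypothetical illustration, not the plugin's code. It assumes similarity scores between 0.0 and 1.0 and a threshold entered as a percentage, and the resource IDs and scores are made up.

```python
def filter_matches(matches, threshold_percent):
    """Keep matches whose similarity is at or above the threshold.

    matches: list of (resource_id, similarity), similarity in 0.0 to 1.0
    """
    cutoff = threshold_percent / 100.0
    return [(ref, score) for ref, score in matches if score >= cutoff]

# Hypothetical results for one source image, best match first.
matches = [(201, 1.00), (202, 0.97), (203, 0.82), (204, 0.60)]

duplicates = filter_matches(matches, 95)  # strict: likely duplicates only
similar = filter_matches(matches, 80)     # looser: visually similar images
```

With these example scores, the 95% cutoff keeps resources 201 and 202, while the 80% cutoff also lets 203 through. Raising the threshold trades recall for precision in the same way.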

CLIP Vector Generation

Vector generation will happen automatically once the plugin is enabled if your system is set up to execute the cron script regularly. You can manually index by running the scripts in /plugins/clip/scripts/.